Hi @David Harper CFA FRM

In R39-P2-T5 Tuckman Ch9 Model 1 - Simulation:

Why do we always scale the random value by SQRT(1/12) for every month when calculating dw?

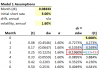

Current calculation in P2.T5.Tuckman_Ch9.xlsx for the 3 month rate:

Intuitively, though, I thought we should be scaling by SQRT(0.25), which is the size of a 3-month step.

The tree values make more sense to me: each node's values depend on the preceding values, so there is an evolution over time. In the simulation, it seems we don't take the passage of time into account, and I'm not sure why.
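A minimal sketch for checking the intuition, assuming Tuckman's Model 1 (dr = σ dw, no drift) and assuming the simulation builds each month's rate from the previous month's rate. The values of SIGMA and R0 below are hypothetical and are not taken from P2.T5.Tuckman_Ch9.xlsx. Under those assumptions, three monthly shocks each scaled by SQRT(1/12) add up to a variance of σ²·(3/12) = σ²·0.25, the same as one shock scaled by SQRT(0.25); the passage of time enters through the accumulation of steps rather than through the per-step scaling.

```python
import math
import random

# Hypothetical parameters (not from the course spreadsheet)
SIGMA = 0.01       # annual basis-point volatility of the short rate
R0 = 0.05          # starting short rate
DT = 1.0 / 12.0    # monthly time step, in years


def simulate_path(n_steps, rng):
    """Model 1 (dr = sigma * dw, no drift): each month adds sigma * sqrt(dt) * z."""
    rates = [R0]
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)               # standard normal draw
        dw = math.sqrt(DT) * z                # dw ~ N(0, dt)
        rates.append(rates[-1] + SIGMA * dw)  # new rate builds on the previous one
    return rates


def sample_variance(xs):
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)


rng = random.Random(42)
n_paths = 100_000

# Rate after three monthly steps, each scaled by sqrt(1/12)
three_monthly_steps = [simulate_path(3, rng)[3] for _ in range(n_paths)]
# Rate after a single step scaled by sqrt(0.25)
one_quarterly_step = [R0 + SIGMA * math.sqrt(0.25) * rng.gauss(0.0, 1.0)
                      for _ in range(n_paths)]

print(f"variance, 3 x sqrt(1/12) steps: {sample_variance(three_monthly_steps):.3e}")
print(f"variance, 1 x sqrt(0.25) step:  {sample_variance(one_quarterly_step):.3e}")
print(f"theoretical sigma^2 * 0.25:     {SIGMA ** 2 * 0.25:.3e}")
```

Both simulated variances should land close to the theoretical value; the monthly version also keeps the intermediate month-1 and month-2 rates along each path.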

Edit: An additional question: assuming the calculation above is correct, why do we show the (t) column (I think the video said it should be called dt) in the table if we don't use it in the calculations?

Thanks

Karim